An overview of cloud buzzwords

As this Dilbert comic eloquently illustrates, there's no shortage of cloud buzzwords thrown around today. But as strange and complex as they may sound (even to seasoned developers and system administrators), they are not difficult to understand. In this blog post, I'll walk through the different components and features of the cloud, explaining the associated buzzwords along the way.

What is the cloud?

Back in the early 1990s, when the US government stopped running the networks it had funded (ARPANET and its successor, NSFNET) and let private companies (Internet Service Providers, or ISPs) take over to create the public Internet, there wasn't anything exciting about the Internet at all. After all, the Internet was just a physical computer network that allowed your computer to talk to other computers, usually over a telephone line via a modem.

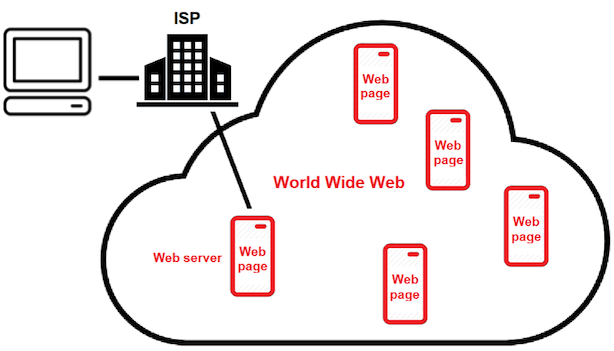

The killer app on the early Internet was the webpage. Anyone with Internet access could install a Web server that would hand out webpages to any other computer on the Internet with a Web browser. And the worldwide collection of public Web servers was called the World Wide Web (WWW).

More importantly, this is where the free and open source Linux operating system and Apache Web server rose to prominence. Many Web historians argue that without Linux and Apache, the Web wouldn't have reached mainstream popularity in the 1990s (or at all). Just imagine how many people would have installed a Web server in the 1990s if it cost a lot of money. Consequently, most Web frameworks and technologies since the 1990s have revolved around Linux and open source development.

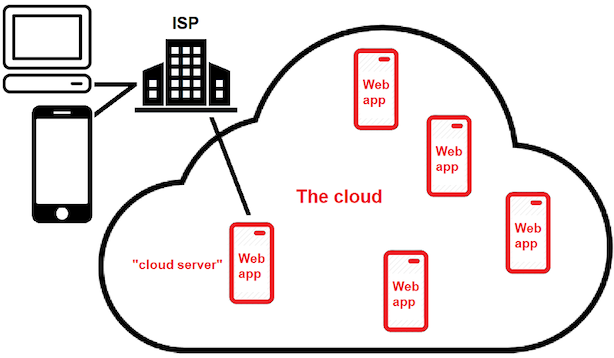

Today, Web servers host complex Web apps instead of static webpages, and these Web apps are accessed by a wide variety of different devices. The Web servers that host these complex Web apps are called cloud servers and the worldwide collection of cloud servers is called the cloud. Thus, the cloud is just the new term for the World Wide Web in today’s day and age.

For good performance, most Web apps are hosted on cloud servers within datacenters that have very fast connections to the backbone of the Internet. The companies that own these datacenters are called cloud providers, and each markets its cloud under a product name (e.g., Amazon Web Services, Microsoft Azure, or Google Cloud Platform).

Getting Web apps to the cloud

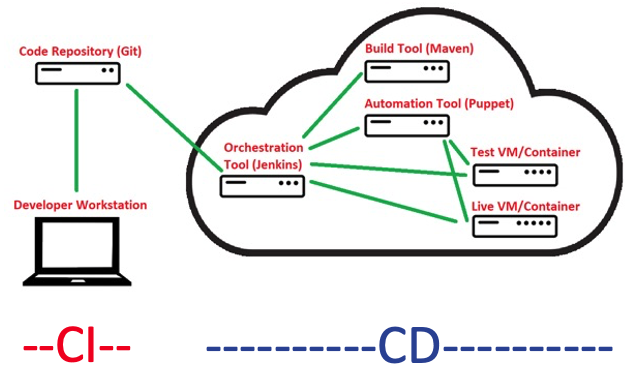

The developers that create Web apps need a way to get their apps to the cloud for testing and deployment. And this process must happen on a regular basis (sometimes many times per day) in order to fix bugs and add new features. In the early days of cloud computing (10 years ago), this may have involved the components shown in the following diagram:

Developers often worked collaboratively, storing the code for their Web app in a central code repository on a server. This server ran version control software (such as Git) that allowed changes to be coordinated, approved and frequently merged together (a process called Continuous Integration, or CI).

When a new change was approved, an orchestration tool (such as Jenkins) would pull that code into a cloud server, compile it with a build tool (such as Maven), and then use an automation tool (such as Puppet) to deploy a virtual machine (VM) or container with the Web app for testing. If the Web app functioned as desired, the test VM/container would replace the live VM/container and users on the Internet would immediately have access to the new version of the Web app. This process was called Continuous Deployment or CD.
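The test-then-promote logic described above can be modelled in a few lines of Python. This is a toy sketch under stated assumptions, not how Jenkins or Puppet actually work: the `Release` and `Environment` classes, the `run_cd_pipeline` function, and the `build` and `smoke_test` callables are hypothetical stand-ins for a real build tool, test suite, and deployment target.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Release:
    """A hypothetical deployable version of the Web app."""
    version: str
    artifact: str


@dataclass
class Environment:
    """Tracks which release is live (serving users) and which is under test."""
    live: Optional[Release] = None
    test: Optional[Release] = None


def run_cd_pipeline(
    env: Environment,
    version: str,
    build: Callable[[str], str],
    smoke_test: Callable[[Release], bool],
) -> bool:
    """Build a new version, deploy it for testing, and promote it only if it passes.

    Returns True if the new release replaced the live one.
    """
    artifact = build(version)               # stand-in for compiling / packaging the app
    env.test = Release(version, artifact)   # deploy the candidate to the test VM/container
    if smoke_test(env.test):
        env.live, env.test = env.test, None  # swap: users now get the new version
        return True
    env.test = None                          # discard the failed candidate; live is untouched
    return False


if __name__ == "__main__":
    env = Environment(live=Release("1.0", "app-1.0.jar"))
    # A build that passes its tests is promoted to live
    run_cd_pipeline(env, "1.1", build=lambda v: f"app-{v}.jar", smoke_test=lambda r: True)
    print(env.live.version)  # 1.1
    # A build that fails its tests leaves the current live release in place
    run_cd_pipeline(env, "1.2", build=lambda v: f"app-{v}.jar", smoke_test=lambda r: False)
    print(env.live.version)  # 1.1
```

Keeping the old live release untouched until the new one passes its tests is what lets a broken build be thrown away without users ever seeing it.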

The systems administrator who installed and managed these components was called a devop (a system operator who supported development), and the entire process (CI & CD) was called a devops workflow.

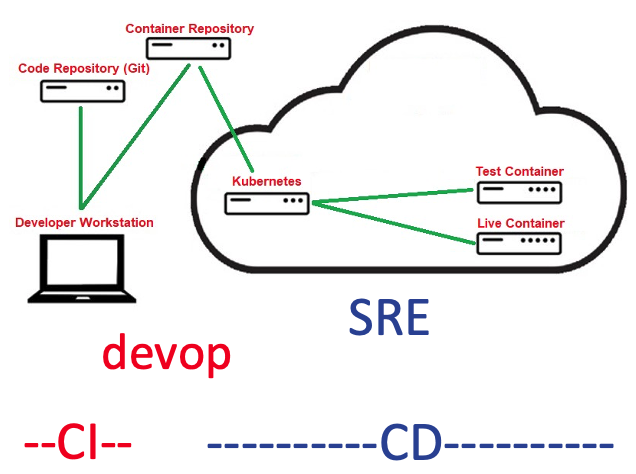

This process has evolved tremendously over the years, and nowadays developers perform many of the tasks that were previously performed by devops (a process called shifting left). Today a devops workflow typically involves the components shown in the following revised diagram:

We still have CI, and it almost always involves Git (instead of other version control systems). However, Web apps are almost always deployed in containers instead of VMs, and developers build their Web apps into containers for local testing before pushing them to a container repository where they can be pulled into the cloud via Kubernetes. Kubernetes (also called K8s) is the most common orchestration and automation tool today (although it can be used alongside other automation tools like Ansible). It can deploy and manage both test and live containers, as well as scale them to run on many different servers as needed.

The term devop now refers to a developer who builds a containerized Web app and deploys it to the cloud, whereas Site Reliability Engineer (SRE) describes the systems administrator who manages the containerized Web app once it reaches the cloud. SREs often fix problems with Web apps once they are running in the cloud, and also configure and manage the other components in a cloud datacenter (storage, networking, authentication, security and so on).

Three other recent terms are also worth noting:

- Gitops refers to CD that is performed automatically via instructions that are part of the Web app code. GitHub Actions and GitLab Runners are two common Gitops systems today. A developer simply adds a text file (in YAML format) that specifies the CD configuration to the Web app code stored in their GitHub or GitLab repository, and the Web app is then automatically deployed to a cloud provider whenever changes are made.

- Devsecops is a term used to describe the incorporation of security measures in all CI and CD components (because security is important nowadays, and cool to brag about as well).

- Infrastructure as Code (IaC) refers to any server, network, or container configuration that is centrally stored in a text file and used for automation purposes. Nearly all orchestration and automation tools implement IaC.
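The IaC idea is easiest to see in a small example. The Python sketch below shows the declarative approach that many orchestration and automation tools follow: the desired state is kept in a text file, the tool compares it with what is actually running, and it works out which changes to make. The JSON layout, the container names and image tags, and the `plan_changes` function are all hypothetical; real tools such as Puppet, Ansible and Kubernetes use their own file formats (often YAML) and do much more, including actually applying the changes.

```python
import json

# Hypothetical IaC file contents: which containers should run, and how many copies.
DESIRED_STATE_FILE = """
{
  "web-frontend": {"image": "example/frontend:1.4", "replicas": 3},
  "api":          {"image": "example/api:2.0",      "replicas": 2}
}
"""


def plan_changes(desired: dict, actual: dict) -> list[str]:
    """Compare desired vs. actual state and list the actions needed to reconcile them."""
    actions = []
    for name, spec in desired.items():
        current = actual.get(name)
        if current is None:
            actions.append(f"create {name} ({spec['replicas']} x {spec['image']})")
        elif current != spec:
            actions.append(f"update {name} -> {spec['replicas']} x {spec['image']}")
    # Anything running that is not in the file should be removed
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(f"delete {name}")
    return actions


if __name__ == "__main__":
    desired = json.loads(DESIRED_STATE_FILE)
    # Hypothetical snapshot of what is running right now
    actual = {
        "api": {"image": "example/api:1.9", "replicas": 2},
        "old-batch-job": {"image": "example/batch:0.1", "replicas": 1},
    }
    for action in plan_changes(desired, actual):
        print(action)
    # create web-frontend (3 x example/frontend:1.4)
    # update api -> 2 x example/api:2.0
    # delete old-batch-job
```

Because the file describes the end state rather than the steps, running the same plan again after the changes are applied produces no actions, which is what makes this style safe to automate.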

How Web apps are hosted on a cloud provider

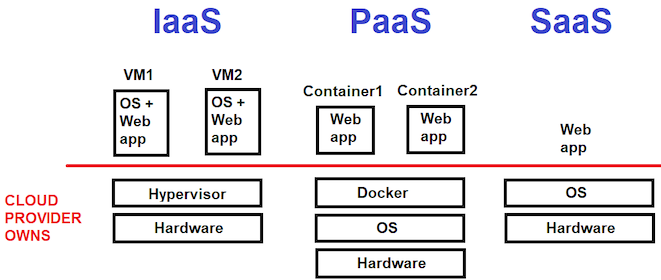

There are 3 different ways that you can run Web apps on a cloud provider. They are called cloud delivery models and illustrated below.

- If you install a whole operating system (OS) to run your Web app within a VM on a cloud provider’s hardware infrastructure, it is called Infrastructure as a Service (IaaS). VMs have been commonly used in companies for over a decade to run multiple OSes on a single server using hypervisor software. IaaS is essentially just paying to run your VMs on a cloud provider’s hypervisor.

- If you run a small container with your Web app on a cloud server, it is called Platform as a Service (PaaS). Containers are similar to VMs, but only contain the parts of the OS that the Web app needs, and do not contain an OS kernel. As a result, containers must run on an underlying OS platform (with a kernel) alongside a container runtime (e.g., Docker). PaaS is the most common cloud delivery model used today, as you can run far more containers than VMs on the same hardware, making it far more cost effective.

- If you run a Web app on a cloud server in any way (including running it directly in an OS on a cloud server), it is called Software as a Service (SaaS). Any Web app running in the cloud (even via IaaS and PaaS) can be referred to as SaaS.
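The cost argument for containers comes down to simple arithmetic: every VM carries its own full OS (kernel included), while containers share the host's kernel. The Python sketch below compares how many copies of an app fit in one server's memory. All of the numbers are made-up, illustrative assumptions, not measurements of any real OS, app, or cloud provider, and the calculation ignores CPU, disk and network limits.

```python
# All figures are hypothetical, chosen only to illustrate the arithmetic.
SERVER_RAM_MB = 64 * 1024      # one cloud server with 64 GB of RAM
HOST_OVERHEAD_MB = 2 * 1024    # host OS plus hypervisor or container runtime
APP_RAM_MB = 512               # memory the Web app itself needs
VM_GUEST_OS_MB = 1024          # each VM boots its own full OS and kernel
CONTAINER_OVERHEAD_MB = 32     # each container shares the host kernel


def instances_per_server(per_instance_overhead_mb: int) -> int:
    """How many copies of the app fit in RAM (ignoring CPU, disk, and network)."""
    usable = SERVER_RAM_MB - HOST_OVERHEAD_MB
    return usable // (APP_RAM_MB + per_instance_overhead_mb)


vms = instances_per_server(VM_GUEST_OS_MB)
containers = instances_per_server(CONTAINER_OVERHEAD_MB)
print(f"VMs: {vms}, containers: {containers}")  # VMs: 41, containers: 116
```

With these assumed figures the same server holds almost three times as many containers as VMs; the exact ratio depends entirely on how large the guest OS overhead is relative to the app.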

What are containers exactly, and why are they good?

On a technical level, containers are a form of OS-level virtualization: you reserve a portion of an underlying operating system and treat that portion as a separate OS. The approach gained prominence with FreeBSD in 2000 (a feature called jails), and Linux followed suit shortly thereafter. Microsoft even supports containers in Windows 10 / Server 2016 and later. To learn more about how containerization works, watch Liz Rice create containers from scratch: https://www.youtube.com/watch?v=8fi7uSYlOdc
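On Linux, that "reserved portion" is built mainly from kernel namespaces, which give a process its own view of process IDs, mount points, network interfaces, hostname and so on, plus cgroups, which limit how much CPU and memory it can use. Each process's namespaces are visible as symbolic links under `/proc/self/ns`, and two processes share a namespace when those links point to the same namespace ID. The Python sketch below simply lists them; it is Linux-only and returns an empty dictionary elsewhere. Running it once on the host and once inside a container should show different IDs for namespaces such as `pid`, `mnt` and `net`.

```python
import os

NS_DIR = "/proc/self/ns"


def list_namespaces() -> dict[str, str]:
    """Map each namespace type (pid, net, mnt, ...) to its ID for the current process.

    Linux-only: returns an empty dict on systems without /proc/self/ns.
    """
    if not os.path.isdir(NS_DIR):
        return {}
    namespaces = {}
    for name in sorted(os.listdir(NS_DIR)):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]"
            namespaces[name] = os.readlink(os.path.join(NS_DIR, name))
        except OSError:
            continue  # some entries can be unreadable in restricted environments
    return namespaces


if __name__ == "__main__":
    for ns_type, ns_id in list_namespaces().items():
        print(f"{ns_type:20} {ns_id}")
```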

The reason containerized Web apps are so popular is that different parts of your Web app can run in different containers, making it easier to develop, fix and evolve. For example, you could create a Python Web app in one container that works alongside a JavaScript Web app in another container to provide a specific functionality. And both of these Web apps can store their data in a backend MySQL database running in yet another container. Thus, to fix a bug in your Python code, you only need to modify and redeploy the Python Web app container. Because of this, we often call containerized Web apps microservices.
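The core of the microservice idea is that each component is a separate program that talks to the others over the network, usually via HTTP. In a real deployment each service would live in its own container; to keep this sketch self-contained, it runs one tiny hypothetical "greeting" service on a local port in a background thread using only Python's standard library, and a second component calls it over HTTP. The service, the `/greet` path, and the JSON shape are made up for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class GreetingService(BaseHTTPRequestHandler):
    """A hypothetical microservice that does one small job: return a greeting as JSON."""

    def do_GET(self):
        if self.path != "/greet":
            self.send_error(404)
            return
        body = json.dumps({"message": "hello from the greeting service"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def start_service() -> ThreadingHTTPServer:
    """Start the service on a free local port (port 0 lets the OS choose one)."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), GreetingService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_service(port: int) -> str:
    """Another component (e.g. the front end) calls the service over HTTP."""
    with urllib.request.urlopen(f"http://127.0.0.1:{port}/greet", timeout=5) as resp:
        return json.loads(resp.read())["message"]


if __name__ == "__main__":
    server = start_service()
    print(call_service(server.server_address[1]))
    server.shutdown()
    server.server_close()
```

Because the two components only share an HTTP interface, the greeting service could be rewritten, fixed or redeployed on its own without touching the caller, which is exactly the property that makes microservices easy to evolve.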

Individual containers can also be flexibly scaled to service more client connections. For example, you can choose to run a copy of your JavaScript Web app container for each client connection, a copy of your Python Web app container for every 20 connections, and only one copy of your MySQL database container regardless of the number of connections. Since public cloud providers have ample hardware in their datacenters to scale your microservices thousands of times easily, they are also called hyperscalers.
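The scaling rules in this example translate directly into arithmetic: one JavaScript container per connection, one Python container per 20 connections (rounded up), and always exactly one MySQL container. The Python sketch below computes the replica counts. The function name and the choice to keep at least one Python container running when there are no connections are assumptions; in Kubernetes you would typically let an autoscaler such as the Horizontal Pod Autoscaler make these decisions rather than write them yourself.

```python
import math


def replicas_needed(connections: int) -> dict[str, int]:
    """Replica counts for each microservice, using the example scaling rules."""
    if connections < 0:
        raise ValueError("connections must be non-negative")
    return {
        "javascript-app": connections,                      # one copy per client connection
        "python-app": max(1, math.ceil(connections / 20)),  # one copy per 20 connections, at least one
        "mysql": 1,                                         # a single database regardless of load
    }


if __name__ == "__main__":
    for n in (1, 20, 45):
        print(n, replicas_needed(n))
```

For 45 connections, for example, this gives 45 JavaScript containers, 3 Python containers and 1 MySQL container.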

By keeping each component as small as possible, and combining multiple components to form larger services, containerized Web apps are a direct implementation of the UNIX philosophy (https://en.wikipedia.org/wiki/Unix_philosophy) that has stood the test of time, again and again.

Container Runtimes & Kubernetes

Recall that you must have a container runtime to run and manage containers on your operating system.

- LXC is one of the earliest container runtimes available for Linux. It is often used by system administrators today to obtain containers that have pre-configured services (VPN servers, ticketing systems, security appliances, etc.) on existing systems within the organization (on-premises or cloud).

- Docker is the most popular container runtime today, and often used by developers to create and test containerized Web apps locally before pushing them to a container repository and running them in the cloud.

- Podman is a more recent, lightweight alternative to Docker that runs containers without a background daemon (and can run them without root privileges).

- CRI-O (Container Runtime Interface for the Open Container Initiative) is the container runtime that was created specifically for Kubernetes.

- containerd is a CRI-compliant container runtime that is also supported by Kubernetes. Docker itself uses containerd under the hood, so containers that developers create and test with Docker run on the same underlying runtime that many Kubernetes clusters use.

Kubernetes natively supports CRI-O and containerd. Developers typically use Docker or Podman to create Open Container Initiative (OCI) compliant container images that work with any OCI-compliant container runtime, including the ones Kubernetes uses. Web apps in an OCI-compliant container can run on any public cloud provider and are called cloud native as a result.

To learn how to work with containers, install Docker Desktop for Windows or macOS and work through the built-in tutorial that guides you through how containers are created and run. Docker Desktop is free for personal use and runs the Docker container runtime in a Linux VM (on macOS) or the Windows Subsystem for Linux (on Windows). If you want to learn how Kubernetes works, Docker Desktop also comes with a small Kubernetes cluster that you can enable and deploy your containers to locally.

Alternatively, you could install minikube on Windows or macOS to learn more about containers and Kubernetes. Minikube is a completely free and open source tool that runs a local Kubernetes cluster (an alternative to the one built into Docker Desktop) and can use the Docker or Podman container runtimes. If you are already comfortable with containers and just want to learn Kubernetes, I recommend installing kind on a Linux system (kind runs each Kubernetes node inside a container).