Fundamental computer science concepts every software developer should know

To be a software developer (a.k.a. programmer or software engineer) today, you don’t need a degree in computer science. In fact, computer science isn’t really about “how” you develop software at all. It’s about how we approach software problems theoretically. And to be frank, you don’t need to worry about computer science theory to be a good software developer today. You just need to know how to solve problems using code, use existing software frameworks well, Google stuff, and read programming language documentation.

This is why many of the largest tech companies these past few years have publicly announced that they don’t require computer science degrees for any of their software developer jobs: https://www.glassdoor.com/blog/no-degree-required/

However, there are some essential computer science concepts that every software developer should know. While they don’t help you develop software per se, they help you visualize the performance and nature of software problems, and understand the terminology that coworkers with computer science degrees will use to discuss them. More specifically, you should understand the following in computer science lingo:

- The difficulty/complexity of a problem

- How an algorithm scales as its inputs scale

Before we dive into these concepts, I’ll start with a basic history of computer science, because you should also be able to discuss the origins of your profession!

History of computer science

Charles Babbage and Ada Lovelace

Computer science arguably started in the early-to-mid 1800s. Charles Babbage designed (but didn’t fully implement) a machine called the Difference Engine that could calculate tables of polynomial values. He later evolved this design into the Analytical Engine, which would accept instructions and data on punch cards for computation. In the 1840s, Ada Lovelace (a.k.a. the Countess of Lovelace) developed an algorithm that allowed the Analytical Engine to calculate a sequence of Bernoulli numbers (widely regarded as the first software program). She also postulated other uses for the Analytical Engine!

Unfortunately, the Analytical Engine never became a reality, and the work by Babbage and Lovelace was largely forgotten until roughly a century later.

Alonzo Church and λ calculus

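In the 1930s, Alonzo Church invented lambda (λ) calculus and set the stage for computing in the field of mathematics. In pure λ calculus, there are only functions. There are no built-in numbers or data types (these are themselves encoded as functions), and calculation is performed by reduction: repeatedly substituting arguments into function bodies until nothing is left to simplify. The examples below borrow numbers and the * operator from ordinary arithmetic to keep things readable.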

For example, here is a function that multiplies x by x:

λx.(x * x)

Thus, if you feed this function the number 5, it produces 25:

(λx.(x * x))(5) //outputs the number 25

In JavaScript, this would be:

let square = x => x * x

square(5) //outputs the number 25

λ calculus is also universal: any computable function can theoretically be expressed in it. As an aside, Y Combinator (which provides seed funding for startups in Silicon Valley and also runs Hacker News) gets its name from the Y combinator in λ calculus, which is a function that finds the fixed point of another function (the point where the function output equals the function input). This is what makes recursion possible when functions have no names.
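To make the combinator idea a little more concrete, here is a minimal sketch in JavaScript. Because JavaScript evaluates arguments eagerly, the sketch assumes the closely related Z combinator (a variant that works under eager evaluation) rather than the textbook Y combinator. The names `Z` and `factorial` are illustrative only.

```javascript
// Z combinator: a fixed-point combinator that works in eagerly evaluated
// languages such as JavaScript. It lets an anonymous function call itself
// without ever referring to its own name.
const Z = f => (x => f(v => x(x)(v)))(x => f(v => x(x)(v)));

// "self" stands in for the recursive call that Z wires up for us.
const factorial = Z(self => n => (n <= 1 ? 1 : n * self(n - 1)));

console.log(factorial(5)); // 120
```

Note that `factorial` never mentions itself inside its own body: the recursion comes entirely from `Z`.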

Alan Turing and the Turing Machine

In the 1930s, Alan Turing described a theoretical machine that could carry out ANY algorithm, and showed that it was equivalent in power to λ calculus. This machine was called a Turing Machine, and needed to have:

- conditional branching

- loops

- variables and memory/storage

Moreover, he drew attention to the fact that Turing Machines could be run within other Turing Machines. Programs (which run within an operating system program), virtual machines, and containers are examples of this today. Computer scientists later defined simpler, less powerful machine models as well, such as Finite State Machines and Pushdown Automata (Google them if you want to know more). Anything that can simulate a Turing Machine is said to be Turing-complete (most modern programming languages are Turing-complete).
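As a rough illustration of the three ingredients listed above, here is a small single-tape Turing Machine simulator in JavaScript. It is a sketch under simplifying assumptions (the tape only grows to the right, and the rule format, `runTuringMachine` and `invertBits` are made up for this example), not a faithful model of Turing's original formulation.

```javascript
// A tiny single-tape Turing Machine simulator (illustrative sketch).
// rules[state][symbol] = [symbolToWrite, headMove ("L" or "R"), nextState]
function runTuringMachine(rules, input, startState = "scan", blank = "_") {
  const tape = [...input]; // memory/storage
  let head = 0;            // variable: position of the read/write head
  let state = startState;  // variable: current state

  while (state !== "halt") { // loop
    const symbol = tape[head] === undefined ? blank : tape[head];
    const rule = rules[state] && rules[state][symbol]; // conditional branching
    if (!rule) throw new Error(`No rule for state ${state}, symbol ${symbol}`);

    const [write, move, next] = rule;
    tape[head] = write;
    head += move === "R" ? 1 : -1;
    if (head < 0) throw new Error("Fell off the left end of the tape");
    state = next;
  }
  // Return the tape contents with blank cells removed.
  return tape.join("").split(blank).join("");
}

// Example machine: flip every bit, then halt at the first blank cell.
const invertBits = {
  scan: {
    "0": ["1", "R", "scan"],
    "1": ["0", "R", "scan"],
    "_": ["_", "R", "halt"],
  },
};

console.log(runTuringMachine(invertBits, "1011")); // 0100
```

The rule table is the "program" and the simulator is the machine that runs it, which is a small-scale version of running one machine inside another.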

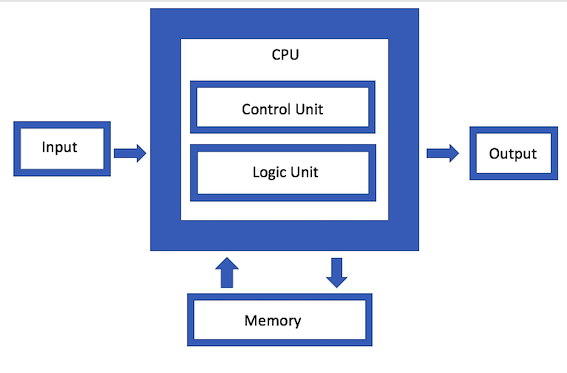

John von Neumann and the von Neumann architecture

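Also in the 1940s, John von Neumann laid the groundwork for a practical computer architecture that would later be used by nearly all computers (even those today). In the von Neumann architecture, a processing unit, a control unit, and input/output devices share a single memory that stores both the program and its data.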

ENIAC and EDVAC

The first general purpose electronic computer (a Turing-complete machine) that could be programmed to solve a wide range of mathematical problems was created by J. Presper Eckert and John Mauchly in 1945 and called ENIAC (Electronic Numerical Integrator and Computer). They followed this success up with EDVAC (Electronic Discrete Variable Automatic Computer) in 1949. EDVAC computed data in binary (not decimal like ENIAC) and contained the memory/storage needed to implement the von Neumann architecture.

EDVAC led to mainframes, which led to more people creating programs (Ada Lovelace-style) and the formalization of software development tools and techniques from the 1960s onwards!

How difficult or complex is a problem in computer science?

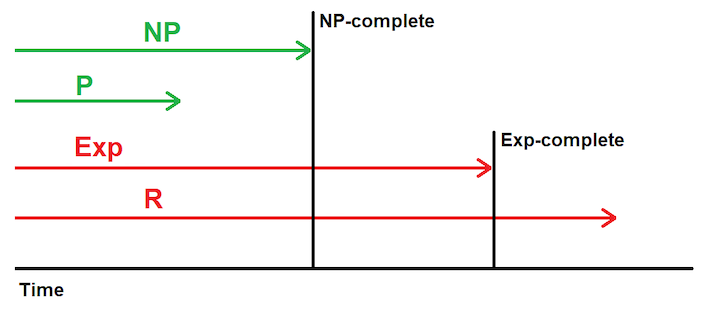

It’s important to understand how to discuss the difficulty or complexity of software development problems. In computer science, we define complexity not in the number of lines of code or the amount of caffeine needed to produce the code, but in how the time it takes for a Turing Machine to solve the problem grows as the input gets larger.

- P (polynomial time) is the set of problems that a Turing Machine can solve in a number of steps that is a polynomial expression of the input size n (such as n, n² or n³). The machine is deterministic: it follows exactly one path of computation, so the same input always takes the same steps. Most problems we consider efficiently solvable are in P.

- Exp (exponential time) describes complex problems that take much longer to solve: the number of steps is an exponential expression of the input size (such as 2ⁿ). They could take a few seconds to solve or a few years to solve, depending on the size of the input. If a problem is at least as hard as every problem in Exp (meaning any Exp problem can be converted into it by reduction), we say it is Exp-hard. If it is Exp-hard and also in Exp itself, we say the problem is Exp-complete. R (recursive) is the larger set of all problems that can be solved in any finite amount of time, so it contains Exp.

- NP (non-deterministic polynomial time) is the set of problems whose solutions can be verified in polynomial time, even if finding a solution appears to take exponential time. Think of NP as a shortcut (i.e. a stroke of inspiration): if you could always guess the right answer, checking that guess would be quick. A problem is NP-hard if it is at least as hard as every problem in NP (any NP problem can be converted into it by reduction), and NP-complete if it is NP-hard and also in NP itself. Tetris is an example of an NP-complete problem. Working out whether a given sequence of pieces can be survived is hard, but if someone hands you a recording of their gameplay, you can quickly check whether it works (or write code to verify it).
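One way to get a feel for the gap between finding a solution and verifying one is the subset-sum problem, a classic NP-complete problem that is used here purely as an illustration: given a list of numbers, is there a subset that adds up to a target? In the JavaScript sketch below (function names are made up for this example), checking a proposed answer takes a single pass, while the brute-force search may have to try every one of the 2ⁿ subsets.

```javascript
// Verify a proposed solution: one pass over the chosen indices, O(n).
function verifySubsetSum(numbers, chosenIndices, target) {
  const unique = new Set(chosenIndices);
  if (unique.size !== chosenIndices.length) return false; // no index reused
  let sum = 0;
  for (const i of chosenIndices) {
    if (!Number.isInteger(i) || i < 0 || i >= numbers.length) return false;
    sum += numbers[i];
  }
  return sum === target;
}

// Find a solution by brute force: may try all 2^n - 1 non-empty subsets.
// Each bit of "mask" says whether the number at that index is included.
// (Bitmasks limit this sketch to lists of fewer than 31 numbers.)
function findSubsetSum(numbers, target) {
  const n = numbers.length;
  for (let mask = 1; mask < 2 ** n; mask++) {
    const chosen = [];
    for (let i = 0; i < n; i++) {
      if (mask & (1 << i)) chosen.push(i);
    }
    if (verifySubsetSum(numbers, chosen, target)) return chosen;
  }
  return null; // no non-empty subset adds up to target
}

const numbers = [3, 34, 4, 12, 5, 2];
console.log(findSubsetSum(numbers, 9));  // indices of a subset summing to 9
console.log(findSubsetSum(numbers, 30)); // null
```

Adding one more number to the list doubles the worst-case work for `findSubsetSum`, but adds only one more step to `verifySubsetSum`.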

The relationship between P, Exp and NP is as follows: every problem in P is also in NP, and every problem in NP is also in Exp (P ⊆ NP ⊆ Exp).

How does an algorithm scale as its inputs scale?

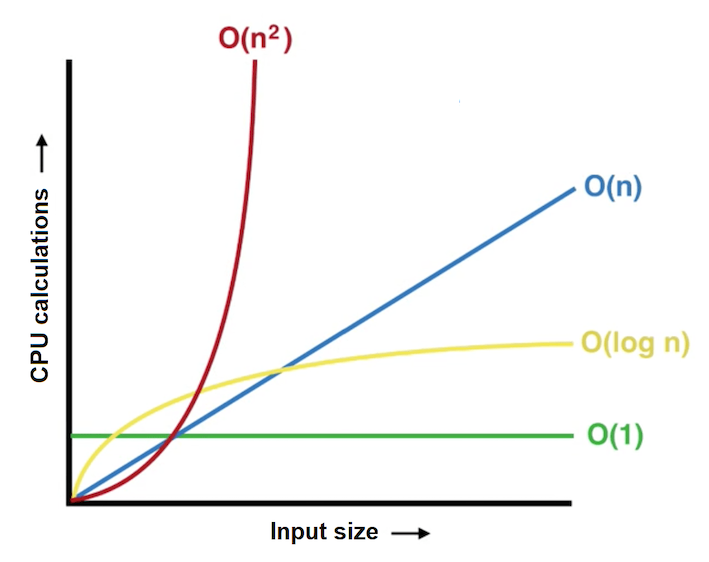

Another important concept you should be able to discuss concerns the nature of algorithms. In computer science, we use Big-Order (or Big-O) notation to describe how the number of CPU operations an algorithm needs grows with the size of the inputs that are provided to it.

- O(1) means that the algorithm takes the same number of CPU operations to solve regardless of the input.

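- O(n) means that the number of CPU operations scales linearly as the input is increased. For example, an iterative (linear) search through an unsorted list is an example of O(n).

- O(n²) means that the number of CPU operations scales quadratically as the inputs are increased (this should be avoided for large inputs, because doubling the input quadruples the work). For example, comparing every item in a list with every other item using nested loops is an example of O(n²).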

- O(log n) means that the number of CPU operations scales logarithmically as the inputs are increased. For example, a B-tree search is an example of O(log n). O(n²) algorithms should be restructured into something that grows more slowly, such as O(n log n) or O(n), whenever possible.
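The differences are easier to see with numbers. The JavaScript sketch below counts the steps taken by one small function per Big-O class. The function names and the step-counting convention are assumptions made for illustration, and a "step" here is a loose stand-in for CPU operations rather than a precise measurement.

```javascript
// O(1): one step regardless of how long the array is.
function firstItem(items) {
  return { value: items[0], steps: 1 };
}

// O(n): linear search checks items one at a time.
function linearSearch(items, target) {
  let steps = 0;
  for (let i = 0; i < items.length; i++) {
    steps++;
    if (items[i] === target) return { index: i, steps };
  }
  return { index: -1, steps };
}

// O(n²): compare every item with every later item.
function hasDuplicate(items) {
  let steps = 0;
  for (let i = 0; i < items.length; i++) {
    for (let j = i + 1; j < items.length; j++) {
      steps++;
      if (items[i] === items[j]) return { found: true, steps };
    }
  }
  return { found: false, steps };
}

// O(log n): binary search halves the remaining range on every step
// (this only works on a sorted array).
function binarySearch(sortedItems, target) {
  let steps = 0;
  let lo = 0;
  let hi = sortedItems.length - 1;
  while (lo <= hi) {
    steps++;
    const mid = Math.floor((lo + hi) / 2);
    if (sortedItems[mid] === target) return { index: mid, steps };
    if (sortedItems[mid] < target) lo = mid + 1;
    else hi = mid - 1;
  }
  return { index: -1, steps };
}

const items = Array.from({ length: 1024 }, (_, i) => i); // 0..1023, sorted
console.log(firstItem(items).steps);          // 1
console.log(binarySearch(items, 1023).steps); // 11
console.log(linearSearch(items, 1023).steps); // 1024
console.log(hasDuplicate(items).steps);       // 523776
```

With 1,024 items, the worst-case step counts range from 1 to over half a million, which is why the Big-O class of an algorithm matters more and more as inputs grow.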

The relationship between these Big-O classes, ordered from slowest-growing to fastest-growing, is: O(1), O(log n), O(n), O(n²).