Kubernetes Quickstart

Over the past two decades, we’ve shifted from running servers on bare metal to running them almost exclusively within virtual machines (VMs). More recently, we’ve seen another shift to running servers within containers. Like VMs, containers are servers that have their own filesystem, IP stack and software. But unlike VMs, containers do not contain a full operating system or operating system kernel. Instead, they rely on the kernel of an underlying operating system, which runs them using a container runtime such as Docker. Consequently, containers are much smaller than VMs and are ideal for running software within cloud environments where resource utilization must be kept to a minimum to control costs.

Containers have become so common today that my two most recent Cengage books each contain a chapter that covers how to run and manage them:

- Linux+ and LPIC-1 Guide to Linux Certification - 5th Edition (ISBN: 978-13375-6979-8)

- Hands-On Windows Server 2019 - 3rd Edition (ISBN: 978-0-357-43615-8)

While I cover the Docker container runtime in depth within these books, I only touch upon how containers are managed and scaled in the cloud using the Kubernetes orchestration software. This is because Kubernetes is huge, and really needs an entire textbook to cover properly.

However, I frequently get asked questions about Kubernetes from readers and students, so this blog post provides an incredibly oversimplified quickstart guide that - at the very minimum - should give you an idea of how Kubernetes works in general, and how powerful and extensible it really is.

So, how can we actually do a Kubernetes quickstart in a single blog post? Well, I’ve thought about it for a while, and I think the best approach is to leverage a small number of software tools that automate much of the difficult configuration so that we can focus on core Kubernetes-related topics:

- Docker Desktop (which includes the Docker container runtime)

- Minikube (a pre-configured single-node Kubernetes cluster that typically runs within a virtual machine)

- kubectl (the main command used to interact with Kubernetes - one common pronunciation is cue-bee-cuttle)

- Helm (a package manager for Kubernetes)

- Lens (a visual management and monitoring tool for Kubernetes)

- Prometheus (a Kubernetes data collection tool)

- Grafana (a tool that can interpret and visually represent the data collected by Prometheus)

Let’s get started!

1. Working with containers

Before running a Linux container on your macOS or Windows system, you need to have an underlying Linux operating system. On macOS, Docker starts a Linux VM using HyperKit (a lightweight hypervisor built on Apple’s Hypervisor framework) in order to run the container. On Windows, Docker will first start the Linux operating system built into the Windows Subsystem for Linux (WSL2). If you are using Windows and don’t already have WSL2 installed, open the Windows Terminal (Admin) app on your Windows system and run the following two commands:

wsl --install

wsl --set-default-version 2

Next, install the Docker Desktop app on your macOS or Windows system from https://www.docker.com/products/docker-desktop. Docker Desktop installs the Docker container runtime (Docker Engine, which uses containerd) and starts it automatically when you start the Docker Desktop app. While you can install the Docker runtime without installing Docker Desktop, the Docker Desktop app is an easy way to see, manage, and troubleshoot the Docker runtime.

Following this, start the Docker Desktop app but close the app window afterwards. The Docker container runtime will continue to run in the background and you can interact with it from the Docker icon in your notification area (Windows) or menu bar (macOS), as shown here on my macOS system:

Now open the Terminal app on your macOS system or the Windows Terminal (Admin) app on your Windows system and run the following commands to download the pre-built Apache (httpd) Linux container image from the Docker Hub online repository and view your results:

docker pull httpd

docker images

To run a copy of the Apache Linux container image with a name of webserver, map port 80 in the underlying macOS/Windows operating system to port 80 in the container, and view your results, you can run the following commands:

docker run -d -p 80:80 --name webserver httpd

docker ps

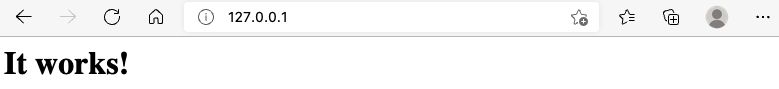

Next, open your Web browser and navigate to http://127.0.0.1 to view the default webpage in your container:

After this, run docker stop webserver to stop running your container. Developers often start with a pre-built container image, add their Web app to it, and then push that image to Docker Hub. To illustrate this process without creating an actual Web app, let’s create another container image based on httpd, but with a different webpage.

Before you begin, create a free account on Docker Hub (https://hub.docker.com) and follow the instructions to create a free public repository called webapp. Next, create an access token (password equivalent for uploading to your Docker Hub repository) by navigating to Account Settings > Security > New Access Token.

Now, create a directory on your system called webappcontainer that includes an index.html text file with the following contents:

<html>

<body>

<h1>This is a custom app!</h1>

</body>

</html>

Also create a Dockerfile text file within the same directory that contains the following lines:

FROM httpd

COPY ./index.html htdocs/index.html
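If you’d rather script this step, the following Python sketch creates the same webappcontainer directory with the index.html and Dockerfile contents shown above. It assumes you call it from the directory where you want webappcontainer created; the function name create_build_context is hypothetical.

```python
from pathlib import Path

# Same contents as the index.html and Dockerfile shown above.
INDEX_HTML = """<html>
<body>
<h1>This is a custom app!</h1>
</body>
</html>
"""

DOCKERFILE = """FROM httpd
COPY ./index.html htdocs/index.html
"""


def create_build_context(base_dir: str = ".") -> Path:
    """Create base_dir/webappcontainer containing index.html and a Dockerfile."""
    context = Path(base_dir) / "webappcontainer"
    context.mkdir(parents=True, exist_ok=True)  # safe to re-run
    (context / "index.html").write_text(INDEX_HTML)
    (context / "Dockerfile").write_text(DOCKERFILE)
    return context
```

Calling create_build_context() once and then running the docker build commands that follow should produce the same image as creating the two files by hand.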

Next, run the following commands to create a new image called webapp (based on httpd but with the new index.html from the current directory), view your new image locally, and then upload it to your Docker Hub repository. Make sure you replace jasoneckert with your own Docker Hub username.

cd webappcontainer

docker build -t jasoneckert/webapp .

docker images

docker login -u jasoneckert #Paste your Docker Hub access token when prompted!

docker push jasoneckert/webapp:latest

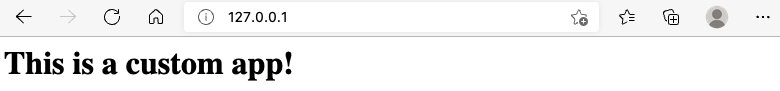

To verify that your container serves the new webpage, run docker run -d -p 80:80 --name webapp jasoneckert/webapp and then navigate to http://127.0.0.1 in your Web browser. Run docker stop webapp when finished.

2. Creating a simple Kubernetes cluster to run containers

Now that we have a Web app container on Docker Hub, we can pull that into a Kubernetes cluster easily and run it any way we like. While Docker Desktop comes with a built-in Kubernetes cluster, it has limited features designed for testing local Web apps only. So, we’ll install Minikube and the kubectl command. On macOS, the easiest way to install these is using the Homebrew package manager (https://brew.sh), and on Windows you can use the equivalent Chocolatey package manager instead (https://chocolatey.org). After you’ve installed Homebrew or Chocolatey, you can run commands to install packages. Since I’m using macOS, I’ll use brew commands and list the equivalent choco commands for Windows in parentheses.

You can use the following commands to install Minikube and kubectl, as well as start a Minikube Kubernetes cluster and display information about it:

brew install minikube (or choco install minikube on Windows)

brew install kubectl (no need to do this on Windows, as kubectl is installed with Minikube)

minikube start

kubectl get nodes

The configuration used to start/manage/scale a container is called a deployment in Kubernetes. To create a deployment for our Web app called webapp, and access it on your local system, you can run the following:

kubectl create deployment webapp --image=jasoneckert/webapp:latest

kubectl expose deployment webapp --type=NodePort --port=80

minikube service webapp

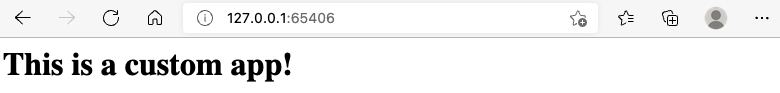

The kubectl expose deployment command shown above creates a service to expose the deployment (NodePort exposes the port on every cluster node). The minikube service command then creates a tunnel from a random port on your local system (in my case it was 65406) to the exposed service within the Minikube VM, and opens it in your default Web browser:

If you want to see the IP address of the VM Minikube created for Kubernetes, you can use minikube ip, or you can use minikube stop to stop the VM entirely. If there are issues with your deployment, kubectl get deployment will indicate that 0 pods are ready or available. You can then use kubectl describe pod -l app=webapp to find out why. A pod is the smallest deployable unit in Kubernetes; it can consist of a single container, or of multiple containers that work together to provide a Web app or service.
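The imperative kubectl create deployment and kubectl expose commands above generate Deployment and Service objects for you. The following Python sketch builds roughly equivalent manifests and prints them as a JSON List; kubectl fills in additional defaults, so the live objects will contain more fields. It assumes the app=webapp label that kubectl create deployment applies, and the helper names are hypothetical. Here the container name webapp also matches the name kubectl derives from the image, which the kubectl set image command later in this post relies on.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1) -> dict:
    """Deployment roughly matching `kubectl create deployment NAME --image=IMAGE`."""
    labels = {"app": name}  # label used by the selector and the pod template
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def nodeport_service_manifest(name: str, port: int) -> dict:
    """Service roughly matching `kubectl expose deployment NAME --type=NodePort --port=PORT`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": name},  # sends traffic to pods labeled app=<name>
            "ports": [{"port": port, "targetPort": port, "protocol": "TCP"}],
        },
    }


# kubectl accepts a "List" object that wraps several resources in one file.
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment_manifest("webapp", "jasoneckert/webapp:latest"),
        nodeport_service_manifest("webapp", 80),
    ],
}
print(json.dumps(manifest, indent=2))
```

For example, saving the output to a hypothetical webapp.json file and running kubectl apply -f webapp.json should create both objects on a cluster where they don’t already exist.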

3. Scaling containers

Kubernetes treats all of the pods that comprise a deployment as a load-balanced unit that can scale. To ensure that you have 3 pods for redundancy in case a single pod running your Web app goes down, you could run the kubectl edit deployment webapp command (which opens the deployment configuration in your default text editor), set replicas: 3 in the spec section, and save your changes. Afterwards, you can verify that Kubernetes has automatically scaled up your Web app by running the following commands:

kubectl get deployment webapp

kubectl get pods -l app=webapp

kubectl logs -f -l app=webapp --prefix=true
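If you would rather not edit the deployment interactively, the same replicas change can be applied with kubectl patch and a JSON merge patch. The Python sketch below only builds and prints that command (scale_command is a hypothetical helper); you could run the returned list with subprocess.run(cmd, check=True) on a machine where kubectl is configured for your cluster.

```python
import json
import shlex


def scale_command(deployment: str, replicas: int) -> list[str]:
    """Build a kubectl patch command that sets spec.replicas non-interactively."""
    if replicas < 0:
        raise ValueError("replicas must be zero or greater")
    patch = {"spec": {"replicas": replicas}}  # the same field you change with kubectl edit
    return ["kubectl", "patch", "deployment", deployment,
            "--type=merge", "-p", json.dumps(patch)]


# Print a shell-quoted version of the command you can copy and paste.
print(shlex.join(scale_command("webapp", 3)))
# prints: kubectl patch deployment webapp --type=merge -p '{"spec": {"replicas": 3}}'
```

kubectl also provides kubectl scale deployment webapp --replicas=3, which is the more direct built-in way to make the same change.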

To see detailed information regarding session affinity and load balancing, you can use the kubectl get service webapp -o yaml command.

Kubernetes can also automatically scale the number of pods based on resource usage, such as the CPU load generated by traffic to your Web app - this is called horizontal pod autoscaling (HPA). To do this, you must first install the metrics server. Normally this is installed using Helm (discussed later), but if you are using Minikube, you can run the following commands to view and install the metrics server:

minikube addons list | grep metrics-server (or minikube addons list | select-string metrics-server on Windows)

minikube addons enable metrics-server

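After a few minutes, you can run kubectl top nodes to view the statistics that are collected. Because the HPA calculates CPU utilization as a percentage of each container’s CPU request, your deployment must also specify a CPU request (e.g. kubectl set resources deployment webapp --requests=cpu=100m); otherwise, kubectl get hpa will show an unknown target. Next, you can use the following commands to create and view an HPA configuration for your Web app that automatically scales from 1 to 8 pods when average CPU utilization consistently exceeds 50%: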

kubectl autoscale deployment webapp --min=1 --max=8 --cpu-percent=50

kubectl get hpa webapp
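The scaling decision behind kubectl autoscale can be approximated with the formula from the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization), skipped when that ratio is within a tolerance (0.1 by default) of 1.0, and then kept between --min and --max. The Python sketch below is a simplification under those assumptions; it ignores details such as scale-down stabilization windows and pods that are not ready yet, and desired_replicas is a hypothetical name.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_percent: float,
                     target_cpu_percent: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Estimate the replica count an HPA would request for a CPU utilization target.

    current_cpu_percent is the average utilization across pods, measured
    relative to each container's CPU request.
    """
    ratio = current_cpu_percent / target_cpu_percent
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the --min and --max bounds given to kubectl autoscale.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 100, 50, 1, 8))  # 4: double the target load, double the pods
print(desired_replicas(3, 52, 50, 1, 8))   # 3: within the 10% tolerance
print(desired_replicas(6, 200, 50, 1, 8))  # 8: capped by --max
print(desired_replicas(4, 5, 50, 1, 8))    # 1: scaled down to --min
```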

4. Upgrading and downgrading container images in production

After a while, your developers will make a new container image available (e.g. jasoneckert/webapp:2.0 on Docker Hub). To update your Kubernetes cluster to use the new image in production, you can either:

- Edit your deployment (kubectl edit deployment webapp), modify the image line, and save your changes, or

- Run kubectl set image deployment/webapp webapp=jasoneckert/webapp:2.0

Kubernetes will immediately start replacing the pods with your new image in sequence until all of them are upgraded. If an upgraded image causes stability issues, you can revert to the previous image using kubectl rollout undo deployment webapp. You can also use kubectl rollout history deployment webapp to view rollout history.
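This sequential replacement is a rolling update, and by default a Deployment limits it with maxSurge and maxUnavailable values of 25%, where maxSurge rounds up and maxUnavailable rounds down. The following Python sketch, assuming those default percentages, estimates how many pods can exist and how many must stay available during a rollout; rollout_bounds is a hypothetical helper, not part of kubectl.

```python
def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a RollingUpdate."""
    if replicas < 1:
        raise ValueError("this sketch assumes at least one replica")
    surge = (replicas * max_surge_pct + 99) // 100        # maxSurge percentage rounds up
    unavailable = (replicas * max_unavailable_pct) // 100  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # the Deployment controller avoids a rollout that cannot progress
    return replicas + surge, replicas - unavailable


print(rollout_bounds(3))   # (4, 3)
print(rollout_bounds(10))  # (13, 8)
```

For the three replicas configured earlier, this means Kubernetes can briefly run a fourth pod with the new image while keeping all three existing pods available until the replacement is ready.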

5. Providing external access to your Web app using ingress

Up until now, I’ve had to access the pods that comprise my Web app using http://127.0.0.1:65406 from my local system via a Minikube tunnel. In order to provide external access to these pods, you need to set up either a load balancer or an ingress controller. To use a load balancer, you just need to change from using NodePort to LoadBalancer and pay for the load balancing service on your cloud provider. The cloud provider then gives you an external IP that you can use when creating a DNS record for your Web app. Alternatively, you can use an ingress controller proxy (e.g. Nginx, HAProxy or Traefik). In this method, you configure a node balancer in front of your cluster that routes traffic to Nginx/HAProxy/Traefik and then your Web app.

Let’s install an Nginx ingress controller in our Kubernetes cluster and configure it to allow external access to our Web app. The easiest way to install additional components in a Kubernetes cluster is by using the Helm package manager, which uses Helm charts to store the information needed to add and configure the appropriate software. There are many different repositories on the Internet that provide Helm charts for different Kubernetes components. The following commands install the Helm package manager, add the Nginx Helm repository, and install the Nginx ingress controller:

brew install helm (or choco install kubernetes-helm on Windows)

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm install ingress-nginx ingress-nginx/ingress-nginx

At this point, you would normally use the kubectl get service ingress-nginx-controller command to get the external IP address of your Web app (typically a public IP provided by the cloud provider). However, because we are running Kubernetes in a Minikube VM, we must first run minikube tunnel to provide external access to the VM (this will also prompt you to supply your macOS/Windows user password, and it must stay running in its own terminal window). Following this, kubectl get service ingress-nginx-controller should display an external IP of 127.0.0.1 because we’re using Minikube (which is designed for local access only).

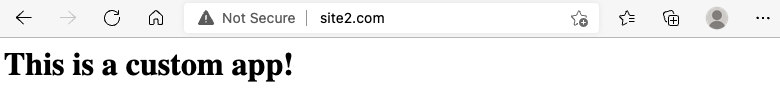

To emulate DNS resolution, you can edit your /etc/hosts file (sudo vi /etc/hosts on macOS, or notepad C:\Windows\System32\drivers\etc\hosts on Windows) and append site2.com to the line that starts with 127.0.0.1, saving your changes when finished.
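If you prefer to script this edit, the following Python sketch applies the same change to the text of a hosts file: it appends site2.com to the first uncommented line that starts with 127.0.0.1, or adds a new 127.0.0.1 site2.com line if there is none (as on a default Windows hosts file, where the localhost entries are commented out). The function name add_host_alias is hypothetical, and writing the result back to the real file still requires administrator privileges.

```python
def add_host_alias(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with hostname mapped to ip, leaving comments intact."""
    lines = hosts_text.splitlines()
    for i, line in enumerate(lines):
        content, sep, comment = line.partition("#")  # split off any trailing comment
        fields = content.split()
        if fields and fields[0] == ip:
            if hostname not in fields[1:]:
                updated = content.rstrip() + " " + hostname
                lines[i] = updated + (" " + sep + comment if sep else "")
            break
    else:
        lines.append(f"{ip} {hostname}")  # no active entry for ip: add one
    return "\n".join(lines) + "\n"


sample = "127.0.0.1\tlocalhost\n::1 localhost\n"
print(add_host_alias(sample, "127.0.0.1", "site2.com"), end="")
```

Running it a second time on its own output leaves the text unchanged, so it is safe to re-run. Make a backup copy of your hosts file before writing the result back to it.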

Next, create a webapp-ingress.yml text file in your current directory that contains the following lines:

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp-ingress
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: site2.com
    http:
      paths:
      - path: /
        pathType: ImplementationSpecific
        backend:
          service:
            name: webapp
            port:
              number: 80

This file is called a manifest file. Manifests are just JSON- or YAML-formatted text files that contain configuration information that you can apply to your Kubernetes cluster. This particular manifest creates an Ingress resource that tells the Nginx ingress controller to route HTTP requests for site2.com to the webapp service on port 80.
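Since manifests can be written in JSON as well as YAML, the following Python sketch builds the same Ingress as a dictionary and prints it as JSON. It assumes the same names used in the YAML above (webapp-ingress, site2.com, and the webapp service on port 80); redirecting its output to a file (for example, a hypothetical webapp-ingress.json) should let you apply it with kubectl apply -f just like the YAML version.

```python
import json

# Mirrors webapp-ingress.yml field for field.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {
        "name": "webapp-ingress",
        "namespace": "default",
        "annotations": {"kubernetes.io/ingress.class": "nginx"},
    },
    "spec": {
        "rules": [{
            "host": "site2.com",  # only requests for this host name match the rule
            "http": {
                "paths": [{
                    "path": "/",
                    "pathType": "ImplementationSpecific",
                    "backend": {"service": {"name": "webapp", "port": {"number": 80}}},
                }]
            },
        }]
    },
}

print(json.dumps(ingress, indent=2))
```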

To apply this manifest, run kubectl apply -f webapp-ingress.yml. Next, you can run kubectl get ingress to view your ingress configuration and enter http://site2.com in your Web browser to access your Web app:

Note that you can still access the Web app using http://127.0.0.1:65406. To prevent this (i.e. force everyone to access the Web app via the Nginx ingress controller only), simply edit your service (kubectl edit service webapp) and change type: NodePort to type: ClusterIP.

6. Using graphical tools

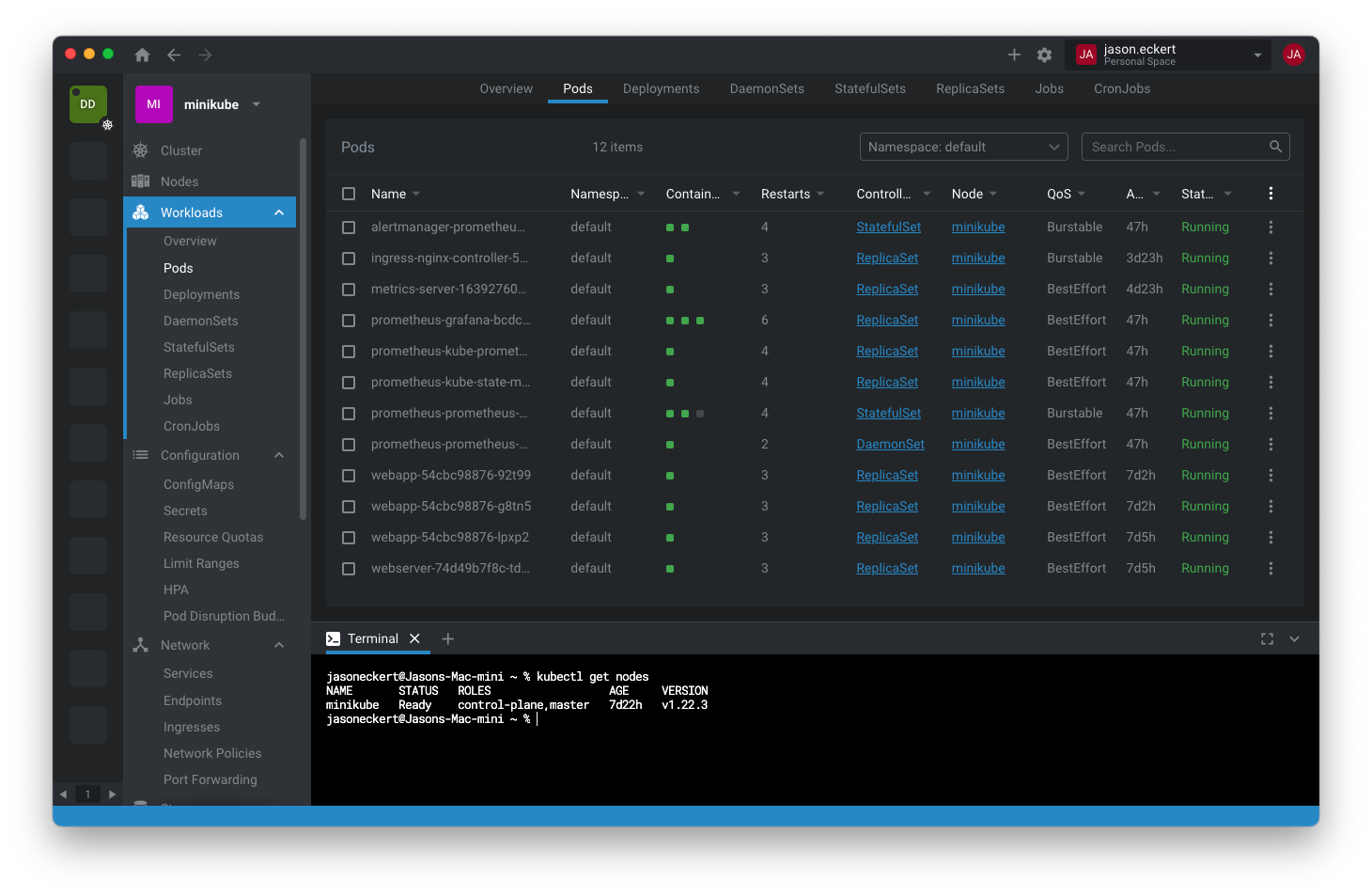

You can also use graphical apps to monitor and manage Kubernetes. The most common app for this is Lens (https://k8slens.dev), which is quite powerful. Unfortunately, it can also be quite daunting for those new to Kubernetes as it can view/edit all of the core concepts (deployments, services, HPA, etc.) as well as all of the advanced ones that I didn’t mention in this blog post.

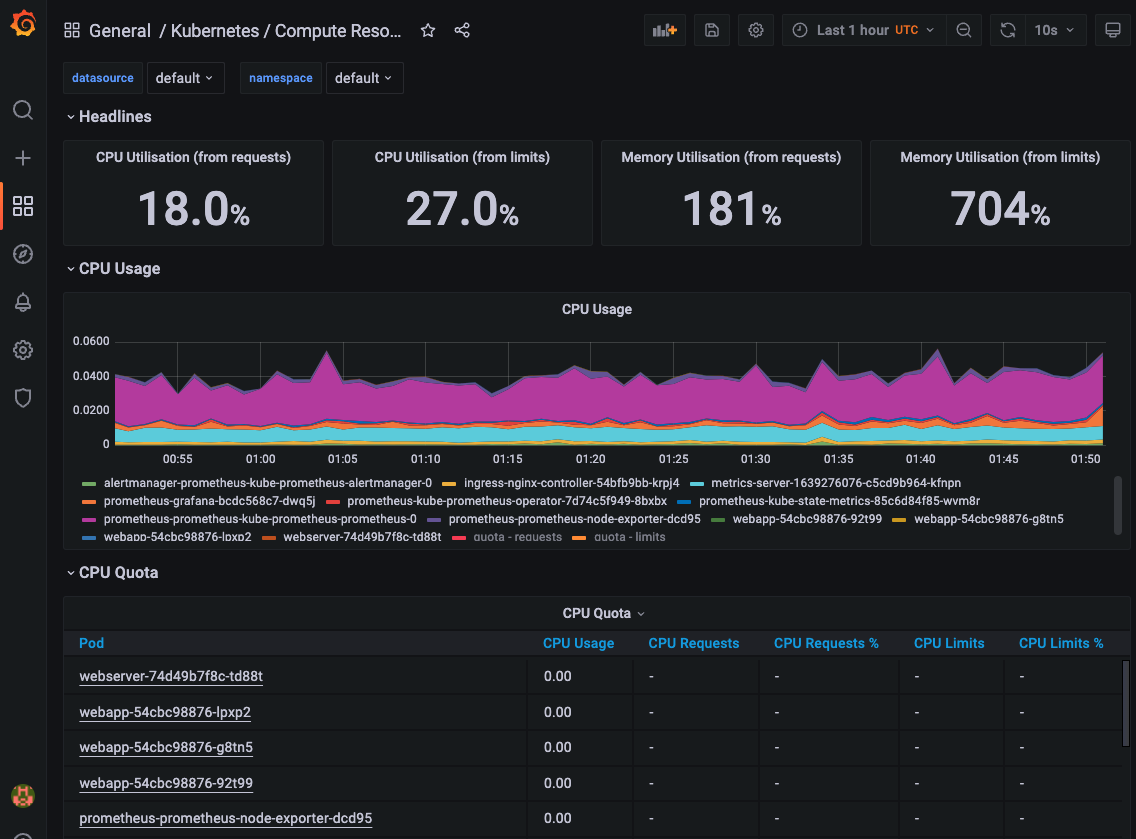

Most administrators use a combination of command line tools and infrastructure-as-code tools (e.g. Terraform) for managing Kubernetes, but use graphical tools for monitoring it. There are many cloud-based monitoring tools (e.g. Datadog) that can be integrated into Kubernetes for a fee, as well as free tools that you can install directly in your Kubernetes cluster, such as Prometheus and Grafana. Prometheus collects metrics from your cluster, and Grafana queries Prometheus to visualize that data. Even the graphs and metrics shown in the Lens app require Prometheus (they are empty otherwise).

To install both Prometheus and Grafana, you can install the Prometheus stack using a Helm chart:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

helm install prometheus prometheus-community/kube-prometheus-stack

This will install a series of pods, including a pod called prometheus-grafana. Since these pods take several minutes to start, watch the output of kubectl get pods periodically to know when they are ready. Next, open the Lens app, locate the prometheus-grafana pod, and scroll down until you find the URL with port 3000. Click the appropriate button/link to set up a port forward (this opens your default Web browser to access it much like minikube service does). Alternatively, you could run the following commands to expose the service:

kubectl expose service prometheus-grafana --type=NodePort --target-port=3000 --name=pgservice

minikube service pgservice

Next, log into the Grafana Web app as the user admin (default password is prom-operator) and view the different available monitoring templates by navigating to Dashboards > Browse (click on each one). Following is the compute resource (pod) dashboard:

You should also start seeing stats for the pods in the Lens app now that Prometheus is running too!

7. Where do I go from here?

If you’ve browsed around the Lens app, you’ve probably noticed that we’ve only scratched the surface of Kubernetes configuration, and only to provide a very rough proof-of-concept style overview. There’s a lot more that you’ll likely want to know at this stage, and you should now have enough basic knowledge to search for the relevant information in the Kubernetes documentation: https://kubernetes.io/docs/home/

Here are some additional core concepts that you can explore further:

- You can integrate Kubernetes directly with a DNS provider (similar to Dynamic DNS) using https://github.com/kubernetes-sigs/external-dns

- For HTTPS/TLS, you can connect your ingress controller to https://cert-manager.io so that it can automatically get certificates for each service. You can install cert-manager using Helm or a downloadable manifest you can apply (see the instructions on the website for details on either method). Next, follow the instructions to connect to a CA (e.g. Let’s Encrypt) and modify your Ingress resource to reference cert-manager. Of course, you’ll also need a publicly-resolvable DNS record for your cluster for this to work.

- Cron jobs are often used by Web apps to do things like reindexing databases, performing maintenance tasks, clearing caches, and so on. Kubernetes has a CronJob resource that can do this. Simply create a manifest that lists the cron schedule, as well as a container it can spawn (e.g. Alpine/Busybox) to perform the commands you specify.

- For persistent storage needed by containers, you need to create a PVC (Persistent Volume Claim) so that the storage doesn’t disappear when your pods or cluster restart. Many block storage volumes can only attach to one node at a time, which makes it difficult to scale. Rook/Ceph and GlusterFS can provide shared storage but are complex to configure. NFS is a simpler option that multiple pods can share. To use NFS, install and configure the NFS Client Provisioner and configure it to connect to an NFS share on another container or NFS server. If the storage is only used for hosting databases, there are many different persistent and cloud native solutions (e.g. CockroachDB) available on the market that may be worth the money depending on your use case.

- Namespaces limit the scope of resources in Kubernetes. I’ve been using the default namespace for everything so far, but you typically create a namespace for the related resources that comprise a Web app. You can use kubectl create namespace lala to create a lala namespace, and add -n lala to other kubectl commands to limit their functionality to that namespace. If you run kubectl delete namespace lala, all resources associated with that namespace will also be deleted.
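The CronJob resource mentioned in the list above can be sketched as a small manifest builder. The following Python example assumes the batch/v1 CronJob API and a Busybox container; the job name cache-cleanup, its schedule, and its echo command are hypothetical placeholders for your own maintenance task, and the lala namespace matches the namespace example above.

```python
import json


def cronjob_manifest(name: str, schedule: str, image: str, command: list[str],
                     namespace: str = "default") -> dict:
    """Build a batch/v1 CronJob that runs command in a short-lived container."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "schedule": schedule,  # standard five-field cron syntax
            "jobTemplate": {
                "spec": {
                    "template": {
                        "spec": {
                            # Job pods must use OnFailure or Never, not Always.
                            "restartPolicy": "OnFailure",
                            "containers": [
                                {"name": name, "image": image, "command": command}
                            ],
                        }
                    }
                }
            },
        },
    }


# Hypothetical nightly job at 3:00 AM; replace the echo with your real task.
job = cronjob_manifest("cache-cleanup", "0 3 * * *", "busybox",
                       ["sh", "-c", "echo clearing caches"], namespace="lala")
print(json.dumps(job, indent=2))
```

Saving the printed JSON to a file and running kubectl apply -f on it would create the CronJob, provided the lala namespace already exists.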