Microsoft ReFS vs Oracle ZFS - FIGHT!

In the past, most IT admins have put their faith in systems and SANs (Storage Area Networks) that use RAID (Redundant Array of Inexpensive Disks) technology. RAID can combine hard disks into simple volumes that aren’t fault tolerant (striped across the disks as RAID-0, or concatenated as JBOD). It can also create mirrored volumes, where two drives hold identical copies in case one fails (RAID-1), or striped volumes with parity, where data is written across several disks along with parity information that can be used to calculate the missing data if a drive fails (RAID-5).
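The parity trick behind RAID-5 is plain XOR: the parity block is the XOR of the data blocks in its stripe, so any one missing block equals the XOR of everything that survived. Here’s a minimal Python sketch of that arithmetic, assuming a three-disk stripe of equal-length byte blocks; `xor_blocks` and `rebuild_missing` are hypothetical helpers for illustration, not part of any RAID tool:

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes]) -> bytes:
    """Recover the single lost block of a stripe from all the survivors."""
    return xor_blocks(*surviving)


# One stripe on a three-disk RAID-5: two data blocks plus their parity.
d0 = b"HELLO"
d1 = b"WORLD"
parity = xor_blocks(d0, d1)

# The disk holding d1 fails: XOR the surviving data with the parity.
recovered = rebuild_missing([d0, parity])
print(recovered)  # b'WORLD'
```

Real RAID-5 rotates the parity block across the disks and works on whole sectors, but the reconstruction math is the same.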

The biggest problem with this approach is that it only protects against hard disk failure. What about the plethora of other problems that can corrupt data as the operating system actually writes it to the file system? These include:

- Accidental driver overwrites

- Bit rot

- Disk firmware bugs

- Driver & kernel buffer errors

- Misdirected writes

- Phantom writes

- Silent data corruption

Both ReFS and ZFS solve these problems at the file system level! More specifically, they both:

- Detect and repair data errors in real time

- Accommodate volumes spanning thousands of disks

- Support huge numbers of files

- Allow you to modify/resize volumes without taking the volume offline

Now let’s take a more in-depth look at each one, starting with ZFS.

Zettabyte File System (ZFS):

- Is a 128-bit filesystem

- Has a capacity of 256 quadrillion Zettabytes (1 Zettabyte = 1 billion Terabytes)

- Has been around for a long time (first introduced in 2001, officially released in 2005)

- Is multi-platform (Solaris, Mac OS X, Linux, FreeBSD, FreeNAS, and more)

- Supports a very broad set of file system features (deduplication, snapshots, cloning, compression, encryption, NFSv4, volume management, etc.)

- Has checksums built into its entire fabric: every block of data and metadata is checksummed, and each checksum is stored in the parent block’s pointer. It’s very obvious that ZFS was designed to implement checksums efficiently at every level
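To make the checksum point concrete: because ZFS keeps each block’s checksum in its parent’s block pointer rather than next to the block itself, the checksums form a tree (a Merkle tree) that ends at the uberblock, and corruption anywhere changes every checksum above it. A simplified Python sketch, assuming SHA-256 and a binary tree for readability (ZFS defaults to the fletcher4 checksum and its block trees are much wider); `build_tree` is a hypothetical name:

```python
import hashlib


def h(data: bytes) -> bytes:
    """Checksum one block (SHA-256 here, purely for illustration)."""
    return hashlib.sha256(data).digest()


def build_tree(leaves: list[bytes]) -> list[list[bytes]]:
    """Return checksum levels, from the leaf checksums up to a single root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        # A parent checksums its children's checksums, so a change to any
        # block ripples up through every ancestor to the root.
        level = [h(b"".join(level[i:i + 2])) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


blocks = [b"block-0", b"block-1", b"block-2", b"block-3"]
root = build_tree(blocks)[-1][0]

tampered = list(blocks)
tampered[2] = b"block-X"  # one silently corrupted block
print(build_tree(tampered)[-1][0] == root)  # False
```

Since a block never vouches for itself, a phantom or misdirected write can’t leave behind data that still matches its own checksum.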

ZFS Terminology:

- ZFS pool = bunch of physical disks (managed with the zpool command)

- ZFS file systems = volumes created from ZFS pools (managed with the zpool and zfs commands)

Creating ZFS volumes:

I did this on an Oracle UltraSPARC server running Solaris 10, but you can pretty much use any operating system that has ZFS support installed. ZFS will use almost any storage you give it: a device file for a raw device, a partition, a volume, or even a file on an existing filesystem! For ease, I’m going to create 3 simple files off of the root of my system to use:

mkfile 256m /disk1

mkfile 256m /disk2

mkfile 256m /disk3

Next, I can create a simple (striped, not fault-tolerant) ZFS volume called lala from all three files and automatically mount it to the /lala directory using a single command:

zpool create lala /disk1 /disk2 /disk3

I can then copy files to /lala or check out the details using the zpool command:

cp /etc/hosts /lala

ls -lh /lala

zpool list

I can also remove the ZFS volume entirely and return to my original configuration:

zpool destroy lala

Similarly, to create a mirror called po using just /disk1 and /disk2, and mount it to the /po directory, I could use:

zpool create po mirror /disk1 /disk2

zpool list

cp /etc/hosts /po

zpool status po

If I overwrite part of /disk1 (simulating corruption) using the dd command, I can use the zpool scrub command to check for the error and then detach /disk1 (the failed disk) and attach /disk3 (a new disk). Finally, I’ll check out some more detailed I/O stats and remove the po volume:

dd if=/dev/urandom of=/disk1 bs=1024k seek=4 count=100 conv=notrunc

zpool scrub po

zpool status

zpool detach po /disk1

zpool status po

zpool attach po /disk2 /disk3

zpool list

zpool status po

zpool iostat -v po

zpool destroy po
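What the scrub does can be sketched in a few lines: it walks every allocated block, checks each side of the mirror against the stored checksum, counts failures against the device that returned bad data, and rewrites that copy from the good side. A simplified Python model, assuming SHA-256 and in-memory lists standing in for /disk1 and /disk2; `scrub` is a hypothetical function, and its error counts only loosely correspond to the CKSUM column in zpool status:

```python
import hashlib


def checksum(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def scrub(mirror: dict[str, list[bytes]], checksums: list[str]) -> dict[str, int]:
    """Verify every block on every side of a mirror and repair bad copies."""
    errors = {name: 0 for name in mirror}
    for i, expected in enumerate(checksums):
        copies = {name: disk[i] for name, disk in mirror.items()}
        good = next((c for c in copies.values() if checksum(c) == expected), None)
        if good is None:
            raise IOError(f"block {i} is unrecoverable: every copy is bad")
        for name, copy in copies.items():
            if checksum(copy) != expected:
                errors[name] += 1       # blame the device that returned bad data
                mirror[name][i] = good  # self-heal from the good copy
    return errors


blocks = [b"hosts", b"passwd", b"motd"]
pool = {"/disk1": list(blocks), "/disk2": list(blocks)}
sums = [checksum(b) for b in blocks]

# Simulate the dd overwrite: garbage lands on two blocks of /disk1.
pool["/disk1"][0] = b"\x8f\x02garbage"
pool["/disk1"][2] = b"\x00\x00"

print(scrub(pool, sums))  # {'/disk1': 2, '/disk2': 0}
```

Plain RAID-1 could only tell that the two sides disagree; the stored checksum is what says which side to trust.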

To create a striped volume with parity, use the raidz keyword with the zpool command. RAID-Z is like RAID-5, but more intelligent: it uses a variable-sized stripe, so every write is a full-stripe write. Like RAID-5, RAID-Z requires a minimum of 3 devices. You can also use raidz2 (double parity like RAID-6, requires 4+ devices) and raidz3 (triple parity, requires 5+ devices). Let’s just create a regular RAID-Z volume called noonoo and check it out:

zpool create noonoo raidz /disk1 /disk2 /disk3

zpool status noonoo

zpool iostat -v noonoo
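The variable-sized stripe is what separates RAID-Z from RAID-5: instead of squeezing writes into a fixed-width stripe, each write is cut into its own rows, every row gets its own parity, and the last row can be narrower than the rest. A simplified Python sketch of that idea for single parity, assuming equal-sized sectors and ignoring real details such as allocation padding; `raidz1_layout` is a hypothetical name:

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))


def raidz1_layout(sectors: list[bytes], ndisks: int) -> list[list[bytes]]:
    """Lay one write out as rows of [parity, data...] across ndisks devices.

    Each row carries at most ndisks - 1 data sectors, and the final row may
    be narrower: the stripe width follows the size of the write.
    """
    width = ndisks - 1
    rows = []
    for start in range(0, len(sectors), width):
        data = sectors[start:start + width]
        rows.append([xor_blocks(*data)] + data)
    return rows


# A five-sector write on a three-disk RAID-Z: rows of 2, 2 and 1 data sectors.
write = [b"AA", b"BB", b"CC", b"DD", b"EE"]
rows = raidz1_layout(write, ndisks=3)
for row in rows:
    print(len(row) - 1, "data sector(s) + 1 parity")

# Lose "DD": XOR its row's parity with the surviving data to get it back.
print(xor_blocks(rows[1][0], rows[1][1]))  # b'DD'
```

Because each write arrives with its own freshly computed parity, nothing already on disk has to be read back first, which is why RAID-Z avoids RAID-5’s read-modify-write penalty.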

There is far more to ZFS than I’ll discuss here; you can resize and modify pools using various zpool commands. But what I’ll leave you with for now is that most of the cool features of ZFS are managed using the zfs command. For example, I could use the zfs command to create three child file systems under /noonoo for three users. This is better than simply using mkdir, because each one is a separate ZFS file system that ZFS keeps track of, with its own properties such as quotas:

zfs create noonoo/larry

zfs create noonoo/curly

zfs create noonoo/moe

zfs list

Now you could list the properties of the noonoo/larry file system to see what you can modify, or even set a quota for larry:

zfs get all noonoo/larry

zfs set quota=10G noonoo/larry

Resilient File System (ReFS):

- Is supported on Windows Server 2012+ / Windows 8+ only

- Is a 64-bit filesystem

- Has a capacity of 1 Yottabyte (1 Yottabyte = 1024 Zettabytes)

- Puts 64-bit checksums on all metadata

- Puts 64-bit checksums on data (only when the integrity attribute is set)

- Has NO support for EFS, quotas, compression, 8.3 names, deduplication, etc. (BitLocker is supported though)
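The ZFS and ReFS capacity figures are easier to compare as powers of two. A quick Python check, assuming ZFS’s limit is the 2^128 bytes implied by 128-bit addressing and reading the 1 Yottabyte ReFS figure as 1 YiB (2^80 bytes); treating “256 quadrillion” as 256 * 2^50 is also an assumption about how that number was derived:

```python
ZIB = 2 ** 70  # one zebibyte (ZiB) in bytes
YIB = 2 ** 80  # one yobibyte (YiB) = 1024 ZiB

zfs_max = 2 ** 128   # assumed: full 128-bit byte addressing
refs_max = 1 * YIB   # assumed: the "1 Yottabyte" figure read as 1 YiB

zfs_in_zib = zfs_max // ZIB
print(zfs_in_zib)              # 288230376151711744
print(zfs_in_zib // 2 ** 50)   # 256, i.e. 256 "binary quadrillion" ZiB
print(zfs_max // refs_max)     # 281474976710656, i.e. 2 ** 48
```

So on paper ZFS’s ceiling is about 2^48 times the ReFS figure, although both are far beyond any real deployment.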

ReFS Terminology:

- Storage pool = a bunch of physical disks

- Storage spaces = virtual disks / volumes made from storage pools (can be NTFS or ReFS)

Creating ReFS Volumes:

ReFS works with Storage Spaces to create either simple volumes from one or more disks, mirrored volumes (RAID-1, requires 2 devices), or parity volumes (RAID-5, requires 3+ devices). ReFS automatically corrects file corruption on a parity space if the integrity attribute is set (it is set by default on parity volumes, but can be changed using the Set-FileIntegrity cmdlet in PowerShell).

Unfortunately, you can’t just create a file and give it to Storage Spaces for creating ReFS volumes – you actually have to use real devices. As a result, I’ve installed Windows Server 2012 R2 in a Hyper-V virtual machine and attached 3 additional 5GB virtual disks to it.

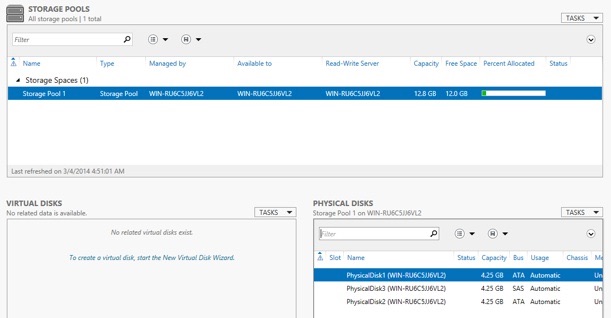

You can easily create a storage pool within Server Manager by navigating to File and Storage Services > Volumes > Storage Pools. When you choose New Storage Pool from the Tasks menu, you’ll get a wizard that asks you to choose the disks you want to put in the pool; you can then give the pool a name and complete the wizard. At this point, you’ll have something that looks like this:

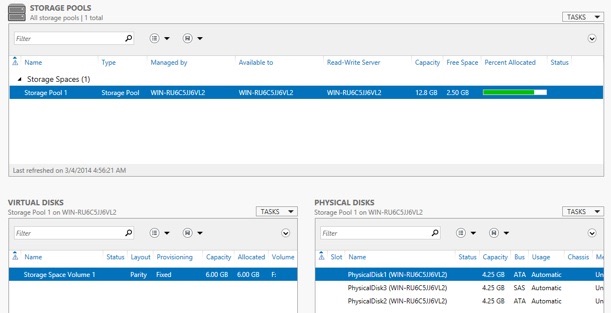

Next, you’ll want to open the Tasks menu in the Virtual Disks section shown above and create a new Storage Space (virtual disk). This runs two wizards back to back. The first asks you to choose the pool you want to use, and then give the Storage Space a name, layout (Simple, Mirror or Parity) and size. The second creates the volume on the Storage Space: it prompts you to choose your Storage Space, volume size, drive letter or mount point (e.g. F:), and file system (choose ReFS!!!). And BOOM! You’re done. You should have a new virtual disk listed under Virtual Disks.

OK, now for the performance comparison! I’ve had the pleasure of being able to run both file systems in a production-class test environment, and bog them down using various software suites. Here is what I’ve found from my experience:

ReFS Performance:

To say it is poor would be very kind. Parity (RAID-5) spaces have dismal performance, especially if you simulate corruption. Microsoft’s only solution to this is to add an SSD (frequently accessed data gets moved to the SSD, a feature called storage tiers). Why is this?!? Well, according to Microsoft, ReFS requires that existing parity information “…be read and processed before a new write can occur.” Archaic, to say the least.
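That Microsoft quote describes the classic RAID-5 read-modify-write cycle. To change one data block in a parity stripe, the new parity must equal old parity XOR old data XOR new data, so both old values have to be read first: one logical write costs two reads and two writes. A Python sketch of that cycle, assuming a single-parity stripe held in memory; `small_write` is a hypothetical helper, and the I/O counts are a model rather than a measurement of Storage Spaces:

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))


def small_write(stripe: list[bytes], parity: bytes, index: int, new: bytes):
    """Overwrite one data block of a RAID-5 stripe via read-modify-write.

    Returns (new_stripe, new_parity, io_counts).
    """
    io = {"reads": 0, "writes": 0}

    old = stripe[index]      # read the old data block...
    io["reads"] += 1
    old_parity = parity      # ...and the old parity block
    io["reads"] += 1

    # Cancel the old data out of the parity, then fold the new data in.
    new_parity = xor_blocks(old_parity, old, new)

    new_stripe = stripe[:index] + [new] + stripe[index + 1:]
    io["writes"] += 2        # write the new data block and the new parity
    return new_stripe, new_parity, io


stripe = [b"AA", b"BB", b"CC"]
parity = xor_blocks(*stripe)

stripe, parity, io = small_write(stripe, parity, 1, b"ZZ")
print(io)  # {'reads': 2, 'writes': 2}
```

Those extra reads sit in front of every small write, which is where the latency piles up on a busy parity space.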

ZFS Performance:

Amazing would be an understatement. You throw anything at ZFS, and it seems to use it in the most efficient way possible (including PCIe SSDs and battery-backed RAM disk devices with ultra-low latency). There are also 3 levels of caching available with ZFS:

- ARC (Adaptive Replacement Cache), which is essentially intelligent memory caching

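- L2ARC (Level 2 ARC), which is a second-level read cache on SSD

- ZIL (ZFS Intent Log), which logs synchronous writes and can be placed on a dedicated SSD (a separate log device) for fast write caching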

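More importantly, ZFS writes parity info with a variable-sized stripe, so every write is a full-stripe write. This almost entirely eliminates RAID-5’s small-write performance penalty, AND closes the infamous RAID-5 write hole (data and parity left out of sync, and data lost, when power fails in the middle of a write).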

Conclusion:

Both ZFS and ReFS detect and repair data errors without dismounting volumes, but ZFS does a much better job. ZFS uses checksums that trickle up through all data and metadata in the file system hierarchy. ReFS approximates this using the integrity attribute, but that comes with a huge performance hit and very high latency. ZFS also supports more file system features and storage technologies (deduplication, PCIe SSDs, battery-backed RAM disks, compression, cloning, snapshots, encryption, and more).

Consequently, ZFS wins hands down. However, don’t write ReFS off just yet. Keep in mind that ZFS is very mature, and currently in production in massive enterprise environments. ReFS has just been introduced by Microsoft – it’s essentially a “1.0” feature that is rough around the edges. The important thing is that Microsoft wants a file system like ZFS, and it looks like they are moving in the right direction (which is a good thing).