RISCy business: The long and convoluted rise of today's dominant computing platform

RISC vs CISC

Traditionally, most computing platforms were designed to ease the burden on software. To ensure that a program could be written easily and with fewer instructions, additional circuitry was added to the central processing unit (CPU) so that a single instruction could carry out a complex, multi-step operation. This approach is referred to as complex instruction set computing (CISC). CISC is still common today thanks to the x86 architecture used by Intel and AMD CPUs in most workstations and servers. Unfortunately, the added circuitry within CISC CPUs equated to added cost and complexity - both of which made these CPUs more expensive to evolve.

Reduced instruction set computing (RISC) started gaining traction in the 1980s as a way to solve the cost and complexity issues with CISC. Because RISC CPUs processed simpler instructions, they needed less circuitry to decode and execute each one, and were easier to create and evolve. Plus, for the same cost, designers could spend the circuitry saved on other things, such as more registers and deeper pipelines. This meant that RISC CPUs could have more features and processing ability. The main downside of RISC compared to CISC was that the software (and especially the compiler) had to do more work, since the same task required more instructions.
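To make that trade-off concrete, here is a minimal Python sketch of a toy machine. It is only an illustration: the instruction names (`MULT_MEM`, `LOAD`, `MUL`, `STORE`), the register names, and the memory layout are all hypothetical and are not taken from x86, ARM, or any other real instruction set. It multiplies two values held in memory, first with a single CISC-style instruction and then with a sequence of simpler RISC-style instructions.

```python
# Toy model only: these instruction names and this machine are hypothetical,
# not taken from any real instruction set.

def run(program, memory):
    """Execute a list of instruction tuples against a memory dict.

    Returns the number of instructions executed.
    """
    regs = {}
    executed = 0
    for op, *args in program:
        if op == "MULT_MEM":    # CISC-style: read two memory cells, multiply, write back
            dst, a, b = args
            memory[dst] = memory[a] * memory[b]
        elif op == "LOAD":      # RISC-style: copy a memory cell into a register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "MUL":       # RISC-style: multiply two registers into a third
            dst, r1, r2 = args
            regs[dst] = regs[r1] * regs[r2]
        elif op == "STORE":     # RISC-style: copy a register into a memory cell
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction: {op}")
        executed += 1
    return executed

# One complex instruction does the whole job.
cisc_program = [("MULT_MEM", "result", "x", "y")]

# Four simple instructions do the same job.
risc_program = [
    ("LOAD", "r1", "x"),
    ("LOAD", "r2", "y"),
    ("MUL", "r3", "r1", "r2"),
    ("STORE", "r3", "result"),
]

cisc_memory = {"x": 6, "y": 7, "result": 0}
risc_memory = {"x": 6, "y": 7, "result": 0}
cisc_count = run(cisc_program, cisc_memory)
risc_count = run(risc_program, risc_memory)

print(cisc_count, cisc_memory["result"])  # 1 42
print(risc_count, risc_memory["result"])  # 4 42
```

Both programs leave the same result in memory, but the RISC-style version needs four instructions where the CISC-style version needs one. In this sketch the complexity of `MULT_MEM` is hidden inside the interpreter, much as it would be hidden in extra circuitry on a CISC CPU, while the RISC-style program pushes that work onto whoever (or whatever compiler) writes the instruction sequence.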

The first rise of RISC platforms

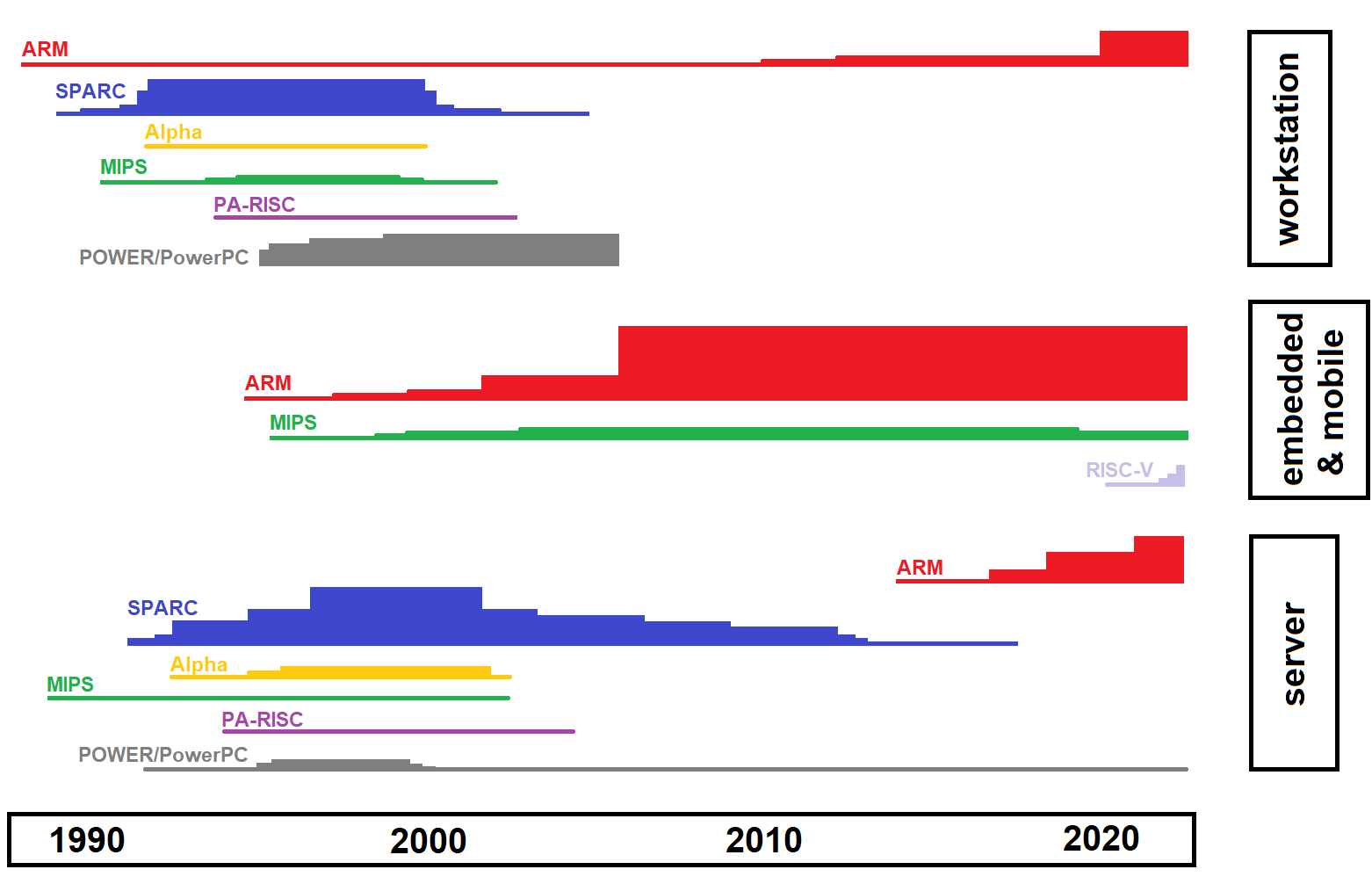

With the big push to RISC in the 1980s, the 1990s saw a flurry of RISC platforms. The most common of these included:

- SPARC (Scalable Processor Architecture), which was used by Sun Microsystems workstations and servers, as well as some Fujitsu servers. Sun was the dominant UNIX vendor of the 1990s and early 2000s.

- MIPS (Microprocessor without Interlocked Pipelined Stages), which was used by Silicon Graphics workstations and servers, and later within some game consoles, embedded hardware, and mobile devices.

- PA-RISC (Precision Architecture RISC), which was used in HP UNIX workstations and servers.

- Alpha, which was the most powerful RISC platform of its time and used in DEC workstations and servers running UNIX or VMS (including those that powered the AltaVista search engine during the dawn of the commercial Internet).

- POWER/PowerPC, which was used in IBM’s UNIX workstations and servers, as well as in Apple Macintosh computers from the mid-1990s until the mid-2000s (when it was replaced by x86).

- ARM (Acorn RISC Machine), which was designed from the ground up with power efficiency as the primary constraint, as illustrated in this video: https://www.youtube.com/watch?v=r2e-oJbngh0

The great RISC stagnation

The 1990s saw RISC primarily used in very expensive high-end UNIX workstations and servers, as well as some low-power embedded devices (ARM). But by 2000, x86 had such a tremendous market share in the workstation and server market that Intel and AMD were able to evolve their CISC platform at a much higher rate than RISC while keeping costs lower. In short, their roadmap left the high-end RISC platforms of the 1990s in the dust.

By the time Apple announced its switch from PowerPC to x86 in 2005, most RISC platforms were already dead or used only in niche markets. The only RISC platform that came through the rise of x86 with a growing market was ARM, and only for low-power devices like personal digital assistants (PDAs).

ARM comes out of nowhere to take the lead

By the late 2000s, the smartphone revolution was in full swing and powered by inexpensive ARM CPUs. After all, ARM’s design was all about low power, and mobile devices needed to maximize battery life. This led to a snowball effect that resulted in a plethora of Internet-connected devices collectively called “the Internet of things” (or IoT for short). Android TV boxes, smart speakers (e.g., Amazon Echo, Google Home), smart appliances, smart watches, and more flooded the market during the 2010s - and nearly all of it was powered by ARM.

By unit volume alone, ARM was the #1 platform on the market - beating out x86 by a long shot - and it was RISC.

RISC moves into the server and workstation space

By the early 2010s, companies were experimenting with creating ARM server CPUs that had better performance per watt of power consumption compared to x86. After all, cooling and electricity are among the biggest ongoing costs in any datacenter, and ARM could save a lot of money in cloud workloads. Two notable examples of server-focused ARM CPUs are Amazon’s Graviton and Ampere’s Altra. Together, these two CPU families run a significant portion of cloud workloads today. Even the world’s most powerful supercomputer in 2021 (Fugaku) used ARM CPUs.

At the same time, low-end netbooks and Chromebooks hit the market with cheap ARM CPUs and a price point to match. This was particularly attractive to school boards, which ordered Chromebooks en masse. Unfortunately, anything better than “low-end” in the personal computer space didn’t have an ARM CPU until Apple brought out its powerful M1 ARM CPU in 2020. Nearly all Apple Mac computers since have shipped with ARM CPUs, and even Lenovo released a Windows 11 laptop running a Qualcomm Snapdragon ARM CPU in 2022 that performs very well.

New RISC platforms

As ARM vies for the top spot in the server and workstation market, a new RISC platform called RISC-V is starting to gain prominence in the embedded device market due to its clean design. And while we probably won’t ever see it in North America, the Chinese company Loongson released its LoongArch RISC platform in 2021, which is largely based on MIPS but with RISC-V design features. LoongArch has variants that are geared towards all use cases (embedded/mobile, workstation, and server).

Summary

In short, RISC enjoyed popularity in the 1990s among high-end workstations and servers, as well as Apple Macintosh computers from the mid-1990s to mid-2000s. However, market competition from x86 killed that market until the rise of low-power mobile devices popularized the ARM RISC platform in the late 2000s. And as ARM continues to chew away market share from x86 across the workstation and server markets, the new RISC-V platform is starting to chew away at ARM’s original embedded device market.